2 Neuromeka, Seoul, Korea

3 Swiss-Mile Robotics AG, Zurich, Switzerland

2,3 The work was carried out during their stay at 1

* Corresponding author: joonho.lee@neuromeka.com

Paper link

Science Robotics Vol.9 adi9641 (2024), Free-access link

Preprint arXiv

ABSTRACT

Autonomous wheeled-legged robots have the potential to transform logistics systems, improving operational efficiency and adaptability in urban environments. Navigating urban environments, however, poses unique challenges for robots, necessitating innovative solutions for locomotion and navigation. These challenges include the need for adaptive locomotion across varied terrains and the ability to navigate efficiently around complex dynamic obstacles. This work introduces a fully integrated system comprising adaptive locomotion control, mobility-aware local navigation planning, and large-scale path planning within the city. Using model-free reinforcement learning (RL) techniques and privileged learning, we develop a versatile locomotion controller. This controller achieves efficient and robust locomotion over various rough terrains, facilitated by smooth transitions between walking and driving modes. It is tightly integrated with a learned navigation controller through a hierarchical RL framework, enabling effective navigation through challenging terrain and various obstacles at high speed. Our controllers are integrated into a large-scale urban navigation system and validated by autonomous, kilometer-scale navigation missions conducted in Zurich, Switzerland, and Seville, Spain. These missions demonstrate the system’s robustness and adaptability, underscoring the importance of integrated control systems in achieving seamless navigation in complex environments. Our findings support the feasibility of wheeled-legged robots and hierarchical RL for autonomous navigation, with implications for last-mile delivery and beyond.

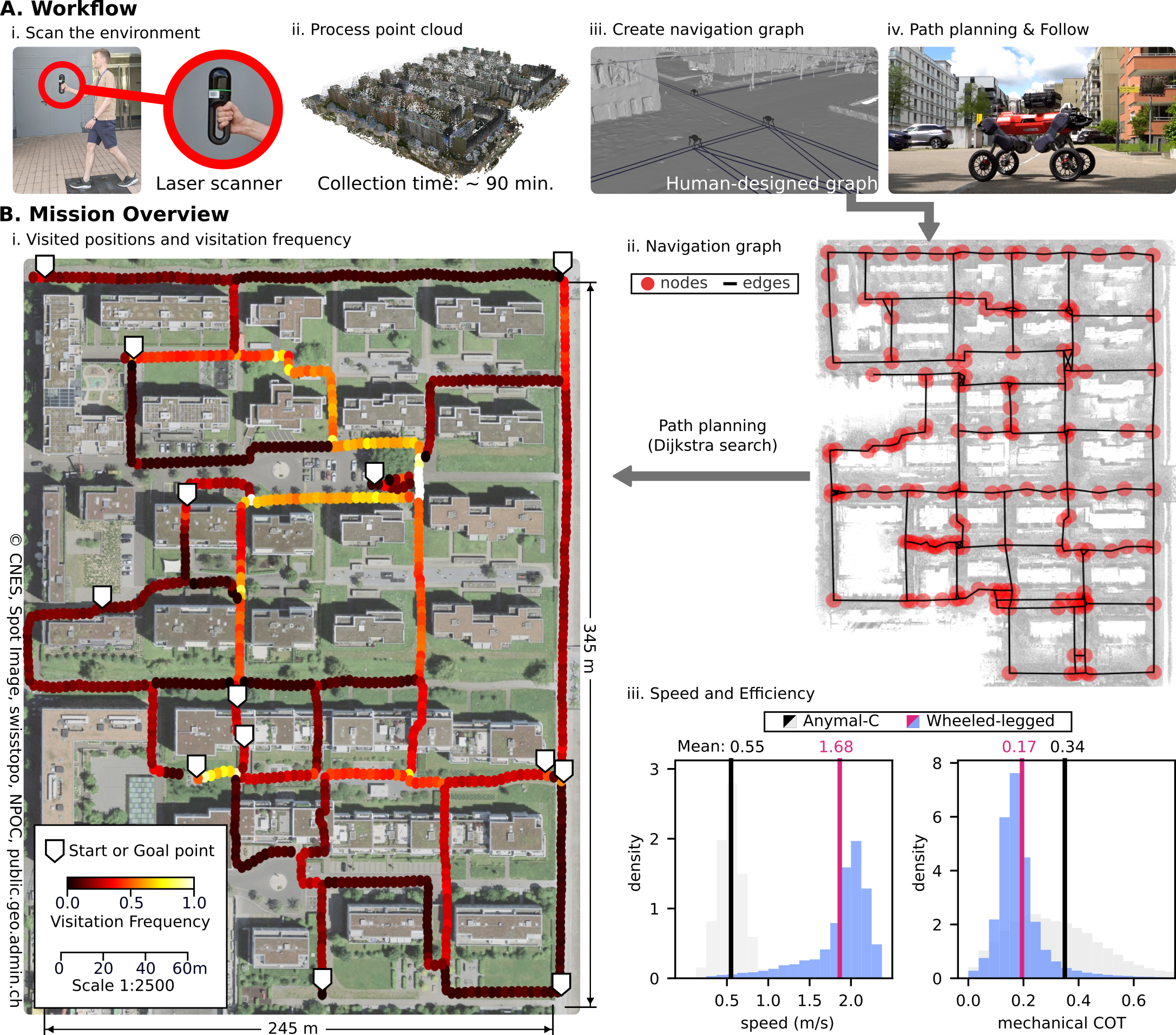

Kilometer-scale Autonomous Deployments

(A) Our city navigation workflow begins with offline preparation, involving scanning the test area using a handheld laser scanner and constructing a navigation graph. (B) The robot autonomously navigated the urban environment to reach 13 predetermined goal points, selected in an arbitrary order. (i and ii) Path planning within the city was facilitated by the pre-generated navigation graph. (iii) Moving speed and mechanical cost of transport compared to a normal legged robot (ANYmal-C).
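For readers unfamiliar with the metric in panel (iii), the following is a minimal sketch of how a mechanical cost of transport is commonly computed: positive mechanical joint power divided by weight times forward speed. The function name, argument names, and the choice to count only positive power are assumptions for illustration; the exact definition used in the paper may differ.

```python
from typing import Sequence

G = 9.81  # gravitational acceleration in m/s^2


def mechanical_cot(
    torques: Sequence[float],
    joint_velocities: Sequence[float],
    mass_kg: float,
    speed_mps: float,
) -> float:
    """Dimensionless mechanical cost of transport for one time step.

    Assumed definition (not taken from the source):
        COT = sum_i max(tau_i * qdot_i, 0) / (m * g * v)
    """
    if len(torques) != len(joint_velocities):
        raise ValueError("torques and joint_velocities must have equal length")
    if mass_kg <= 0 or speed_mps <= 0:
        raise ValueError("mass and speed must be positive")
    # Only positive joint power is counted; negative power is ignored.
    positive_power = sum(
        max(tau * qdot, 0.0) for tau, qdot in zip(torques, joint_velocities)
    )
    return positive_power / (mass_kg * G * speed_mps)
```

A lower value means less mechanical energy is spent per unit weight and distance travelled, which is why the metric is useful for comparing driving against walking at similar speeds.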

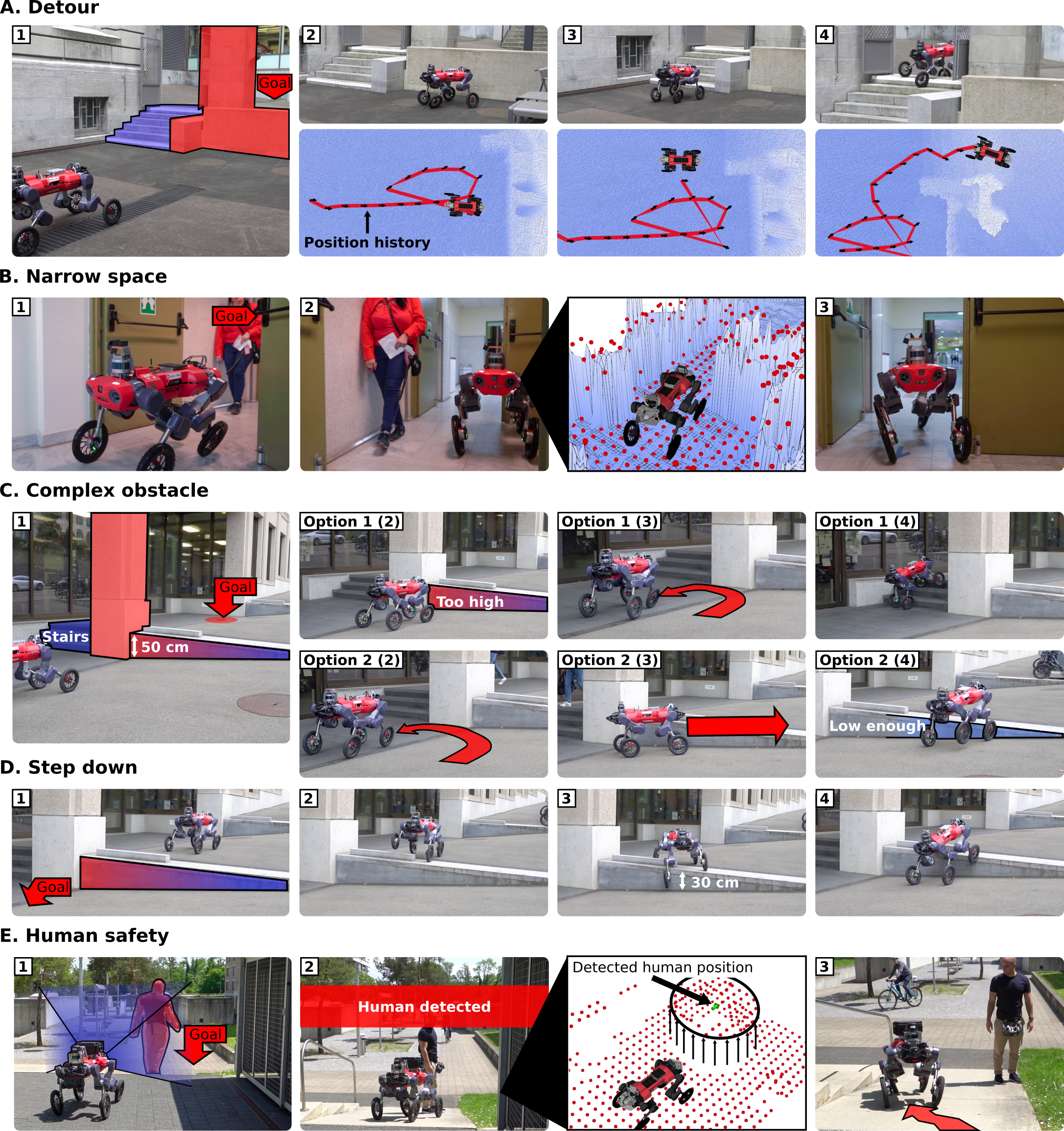

Intelligent Local Navigation

(A) Our robot navigates around blocked routes by actively exploring the area and finding alternative paths. (B) Safe traversal of a narrow space. (C) Our robot exhibits two different ways to traverse the complex obstacle. (C and D) Our robot shows an asymmetric understanding of traversability, being able to traverse higher steps when going down. (E) We ensure safety around humans by incorporating additional human detection and overriding height scan values.
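The override described in (E) can be pictured with a small sketch. It assumes the height scan is a flat list of terrain heights in meters and that a separate detector supplies a boolean mask marking cells occupied by a person; the function name, the data layout, and the 1.0 m override value are all hypothetical and are not taken from the source.

```python
from typing import List, Sequence


def override_height_scan(
    height_scan: Sequence[float],
    human_mask: Sequence[bool],
    obstacle_height: float = 1.0,  # hypothetical value, in meters
) -> List[float]:
    """Return a copy of the height scan with human-occupied cells raised.

    Cells flagged in human_mask are lifted to at least obstacle_height so
    that a planner reading the scan treats them as untraversable.
    """
    if len(height_scan) != len(human_mask):
        raise ValueError("height_scan and human_mask must have equal length")
    return [
        max(height, obstacle_height) if occupied else height
        for height, occupied in zip(height_scan, human_mask)
    ]
```

For example, `override_height_scan([0.0, 0.1, 1.5], [False, True, True])` leaves the first cell untouched, raises the second to 1.0, and keeps the third at 1.5 because it is already taller than the override value.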

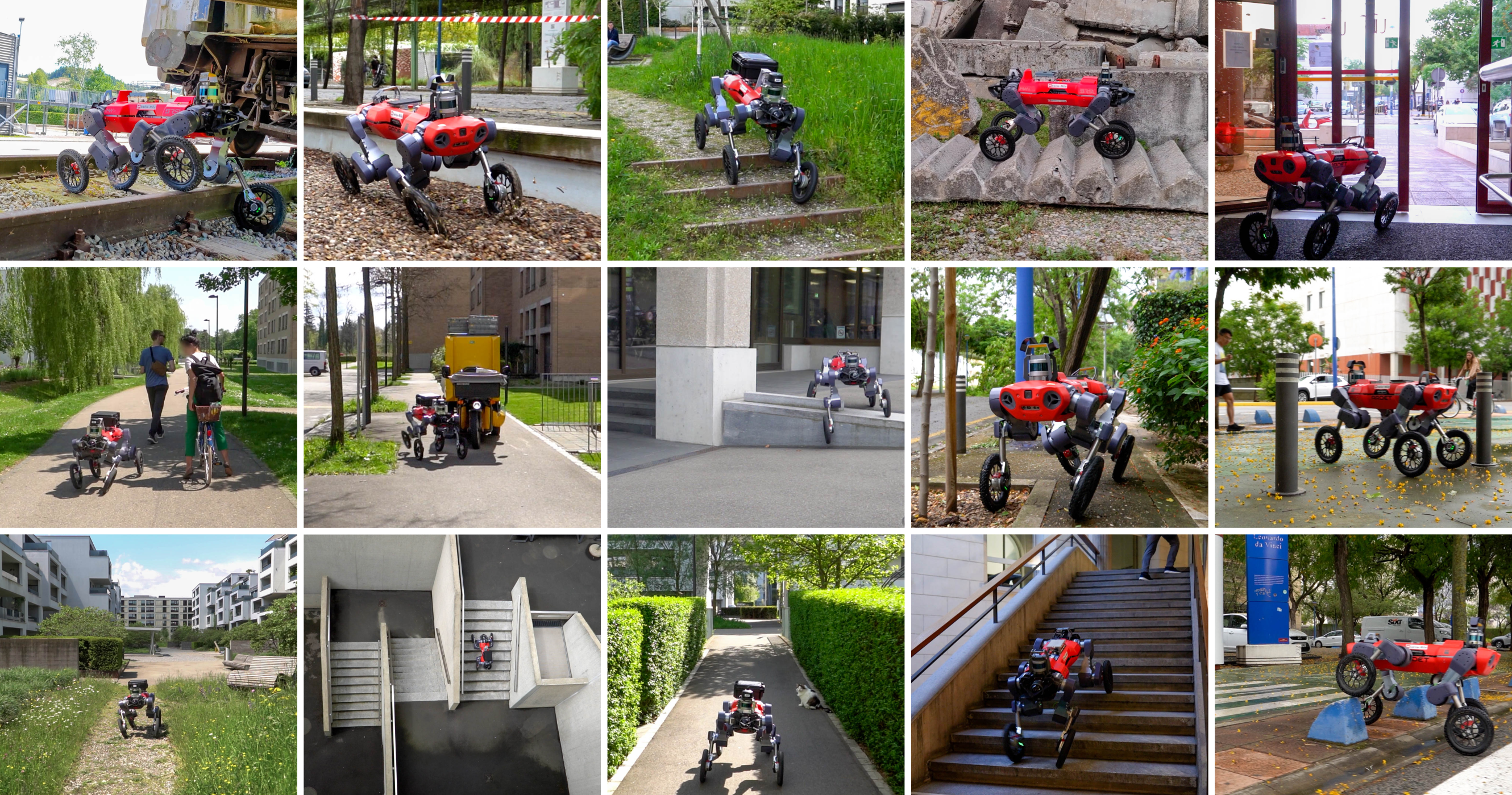

Terrain-adaptive Hybrid Locomotion

Using model-free RL, we developed a versatile locomotion controller that exhibits adaptive gait transitions between walking and driving modes depending on the terrain.

Acknowledgment

Author contributions: J.L. conceived the main idea for the approach and was responsible for the implementation and training of the controllers. The high-level policy was trained by J.L., whereas M.B. developed the simulation environment for the low-level policy and also trained it. The navigation system and safety layer were collaboratively devised and implemented by J.L., M.B., A.R., and L.W. Real-world experiments were planned and executed with contributions from A.R. and L.W. Initial setup of the low-level controller was facilitated by T.M. All authors contributed to system integration and experimentation.

Special thanks: We thank Maria Trodella for all the support during our work. We also thank Turcan Tuna for helping us integrate Open3D SLAM, Julian Keller for the API integration, and Georgio Valsecchi for the hardware support.

Funding: This work was supported by the Mobility Initiative grant funded through the ETH Zurich Foundation, European Union’s Horizon 2020 research and innovation program under grant agreement numbers 101070405 and 101016970, Swiss National Science Foundation through the National Centre of Competence in Digital Fabrication (NCCR dfab), European Union’s Horizon Europe Framework Programme under grant agreement numbers 852044 and 101070596, and Apple Inc.